How to Use Gradient Boosting for Supervised Learning Tasks

- IOTA ACADEMY

- Feb 20

- 4 min read

A potent machine learning method that is frequently applied to supervised learning problems, such as regression and classification, is gradient boosting. It is an ensemble learning technique that improves overall accuracy by building models one after the other to fix the mistakes of earlier models. Gradient Boosting, its operation, when to use it, and how to implement it in Python are all thoroughly explained in this blog.

What is Gradient Boosting?

Gradient Boosting is an iterative machine learning approach that combines several weak models, usually decision trees, to increase prediction accuracy. Gradient Boosting constructs trees in a sequential fashion, with each tree concentrating on fixing the mistakes caused by the preceding trees, in contrast to bagging techniques like Random Forest, which train trees separately in simultaneously.

Key Features of Gradient Boosting:

The main characteristics of gradient boosting are its ensemble nature, which builds a powerful learner by combining several weak learners (decision trees).

Each model is trained in turn, fixing the mistakes of the one before it.

It uses gradient descent to minimize a loss function.

Performs well on challenges involving both regression and classification.

Has a higher prediction accuracy than more basic models.

(Learn more about ensemble learning techniques here: Ensemble Learning)

How Does Gradient Boosting Work?

Gradient Boosting works by iteratively training new models to correct the residual errors of previous models. The process involves the following steps:

Step 1: Initialize the Model

Usually a short decision tree, the first model is a weak learner.

This initial model forecasts the target variable's mean for regression.

It forecasts the target classes' log-odds for categorization.

Step 2: Compute Residual Errors

The discrepancies between actual and predicted values, or residual errors, are calculated.

These residual errors are predicted by training the subsequent model.

Step 3: Train a Weak Learner on Residuals

To reduce the residual mistakes, a decision tree—a new weak learner—is trained.

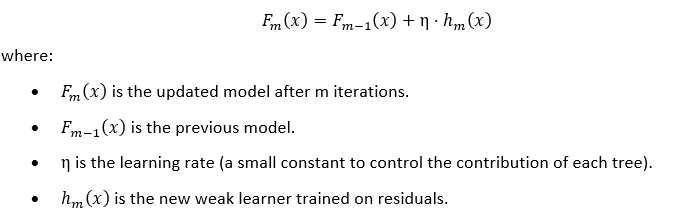

Step 4: Update the Model

The forecasts of the prior model are supplemented with the predictions of the new weak learner, multiplied by a tiny learning rate.

By taking this step, overfitting is avoided and updates are made gradually.

Step 5: Repeat Until Convergence

Until the error stops reducing, or for a predetermined number of rounds, the operation is repeated.

(Read more about Gradient Boosting algorithms: Gradient Boosting)

Mathematical Formulation

The final model is obtained by summing the predictions of all weak learners:

(Explore mathematical details here: Gradient Boosting Explained)

When to Use Gradient Boosting?

Gradient Boosting is used in sectors including e-commerce, healthcare, and finance because it works well on a variety of supervised learning tasks.

Use Gradient Boosting When:

When the dataset has intricate patterns that basic models are unable to capture, use gradient boosting.

For example, fraud detection and medical diagnostics require high precision.

Training time is not a huge concern because the dataset is small to medium in size.

When dealing with structured or tabular data, boosting techniques frequently perform better than deep learning models.

Avoid Gradient Boosting When:

The dataset is extremely large, making training computationally expensive.

Simpler models, such as logistic regression, may be more effective when making predictions in real time.

Because boosting may overfit to small data fluctuations, the dataset has too much noise.

(Check out real-world use cases: Gradient Boosting in Finance)

Implementing Gradient Boosting in Python

Gradient Boosting can be implemented using the GradientBoostingClassifier and GradientBoostingRegressor from Scikit-Learn.

Example 1: Gradient Boosting for Classification

In this example, we classify Titanic passengers based on survival probability using the Titanic dataset.

import pandas as pd from sklearn.model_selection import train_test_split from sklearn.ensemble import GradientBoostingClassifier from sklearn.metrics import accuracy_score # Load Titanic dataset url = "https://raw.githubusercontent.com/datasciencedojo/datasets/master/titanic.csv" df = pd.read_csv(url) # Preprocess data df = df[['Pclass', 'Sex', 'Age', 'Fare', 'Survived']].dropna() df['Sex'] = df['Sex'].map({'male': 0, 'female': 1}) # Convert categorical to numerical # Split data X = df[['Pclass', 'Sex', 'Age', 'Fare']] y = df['Survived'] X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42) # Train Gradient Boosting model gb_clf = GradientBoostingClassifier(n_estimators=100, learning_rate=0.1, max_depth=3, random_state=42) gb_clf.fit(X_train, y_train) # Make predictions y_pred = gb_clf.predict(X_test) # Evaluate model accuracy = accuracy_score(y_test, y_pred) print(f'Gradient Boosting Accuracy: {accuracy:.4f}') |

Output:

Gradient Boosting Accuracy: 0.7692

(Learn more about Scikit-Learn’s Gradient Boosting: Scikit-Learn Documentation)

Example 2: Gradient Boosting for Regression

This example uses the California Housing dataset to predict house prices.

from sklearn.datasets import fetch_california_housing from sklearn.ensemble import GradientBoostingRegressor from sklearn.metrics import mean_squared_error # Load dataset housing = fetch_california_housing() X, y = housing.data, housing.target # Split data X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42) # Train Gradient Boosting model gb_reg = GradientBoostingRegressor(n_estimators=200, learning_rate=0.05, max_depth=4, random_state=42) gb_reg.fit(X_train, y_train) # Make predictions y_pred = gb_reg.predict(X_test) # Evaluate model mse = mean_squared_error(y_test, y_pred) print(f'Gradient Boosting Regression MSE: {mse:.4f}') |

Output:

Gradient Boosting Regression MSE: 0.2594

(More on hyperparameter tuning: Hyperparameter Optimization)

Fine-Tuning Gradient Boosting for Better Performance

Gradient Boosting can be optimized by adjusting hyperparameters.

Hyperparameter | Description | Typical Values |

n_estimators | Number of boosting rounds (trees) | 100-500 |

learning_rate | Step size for each tree | 0.01 - 0.1 |

max_depth | Depth of each tree | 3-7 |

min_samples_split | Minimum samples required to split a node | 2-10 |

subsample | Fraction of data used for each boosting iteration | 0.6 - 1.0 |

max_features | Number of features considered for each split | "sqrt", "log2" |

Tips for Hyperparameter Tuning:

Start with a low learning rate (0.05 or 0.1) and increase n_estimators to improve performance.

Use GridSearchCV or RandomizedSearchCV to find the best combination of hyperparameters.

Set subsample < 1.0 (e.g., 0.8) to introduce randomness and prevent overfitting.

Conclusion

A potent supervised learning method called gradient boosting improves model accuracy by successively fixing prediction errors. It works quite well for problems involving both regression and classification, especially when applied to structured data. To get the best performance, though, hyperparameters must be carefully adjusted.

You can successfully incorporate Gradient Boosting into your own machine learning projects by adhering to the implementation procedures and optimization techniques covered in this guide.

Are you prepared to advance your knowledge of machine learning? Learn sophisticated algorithms like Gradient Boosting, Hyperparameter tuning, and others by enrolling in IOTA Academy's Machine Learning Course right now. To advance your data science career, get practical experience, work on real projects, and receive knowledgeable mentoring.

This is valuable! For anyone aiming to build a career in AI and data analytics, the Ahmedabad Data Science Master Program course is a great option with real-world applications.